Chapter 4 Determinants

4.1 Introduction

In the previous chapter, we studied matrices and the algebra of matrices. We also learnt that a system of algebraic equations can be expressed in matrix form. This means that a system of linear equations such as

$ \begin{aligned} & a_1 x+b_1 y=c_1 \\ & a_2 x+b_2 y=c_2 \end{aligned} $

can be represented as $\begin{bmatrix}a_1 & b_1 \\ a_2 & b_2\end{bmatrix}\begin{bmatrix}x \\ y\end{bmatrix}=\begin{bmatrix}c_1 \\ c_2\end{bmatrix}$. Whether or not this system of equations has a unique solution is determined by the number $a_1 b_2-a_2 b_1$. (Recall that if $\frac{a_1}{a_2} \neq \frac{b_1}{b_2}$, or $a_1 b_2-a_2 b_1 \neq 0$, then the system of linear equations has a unique solution.) The number $a_1 b_2-a_2 b_1$, which determines the uniqueness of the solution, is associated with the matrix $A=\begin{bmatrix}a_1 & b_1 \\ a_2 & b_2\end{bmatrix}$ and is called the determinant of A, or det A. Determinants have wide applications in engineering, science, economics, social science, etc.

[Portrait: P.S. Laplace (1749-1827)]

In this chapter, we shall study only determinants of order up to three, with real entries. We will also study various properties of determinants, minors, cofactors, and applications of determinants in finding the area of a triangle, the adjoint and inverse of a square matrix, the consistency and inconsistency of systems of linear equations, and the solution of linear equations in two or three variables using the inverse of a matrix.

4.2 Determinant

To every square matrix $A=[a_{ij}]$ of order $n$, we can associate a number (real or complex) called the determinant of the square matrix A, where $a_{ij}$ is the $(i, j)^{\text{th}}$ element of A.

This may be thought of as a function which associates each square matrix with a unique number (real or complex). If $M$ is the set of square matrices, $K$ is the set of numbers (real or complex) and $f: M \to K$ is defined by $f(A)=k$, where $A \in M$ and $k \in K$, then $f(A)$ is called the determinant of $A$. It is also denoted by $|A|$ or $det A$ or $\Delta$.

If $A=\begin{bmatrix} a & b \\ c & d \end{bmatrix}$, then the determinant of $A$ is written as $|A|=\begin{vmatrix} a & b \\ c & d \end{vmatrix}=det(A)$.

Remarks

(i) For matrix A, $|A|$ is read as determinant of $A$ and not modulus of $A$.

(ii) Only square matrices have determinants.

4.2.1 Determinant of a matrix of order one

Let $A=[a]$ be a matrix of order 1. Then the determinant of $A$ is defined to be equal to $a$.

4.2.2 Determinant of a matrix of order two

Let $A=\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}$ be a matrix of order $2 \times 2$. Then the determinant of $A$ is defined as:

$ det(A)=|A|=\Delta=\begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix}=a_{11} a_{22}-a_{21} a_{12} $
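The $2 \times 2$ rule can be written as a tiny Python function. This is only an illustrative sketch: the name `det2` and the choice to store a matrix as a list of rows are our own assumptions, not notation from the text.

```python
def det2(m):
    """Determinant of a 2x2 matrix stored as a list of rows [[a11, a12], [a21, a22]]."""
    (a11, a12), (a21, a22) = m
    # |A| = a11*a22 - a21*a12, exactly as in the definition
    return a11 * a22 - a21 * a12


print(det2([[1, 2], [3, 4]]))  # -2
print(det2([[1, 2], [4, 8]]))  # 0
```

A nonzero value for the coefficient matrix $\begin{bmatrix}a_1 & b_1 \\ a_2 & b_2\end{bmatrix}$ corresponds to the unique-solution condition $a_1 b_2-a_2 b_1 \neq 0$ recalled in Section 4.1.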

4.2.3 Determinant of a matrix of order $3 \times 3$

The determinant of a matrix of order three can be found by expressing it in terms of second order determinants. This is known as expansion of a determinant along a row (or a column). There are six ways of expanding a determinant of order 3, corresponding to each of the three rows ($R_1$, $R_2$ and $R_3$) and the three columns ($C_1$, $C_2$ and $C_3$), and all of them give the same value, as shown below.

Consider the determinant of square matrix $A=[a _{i j}] _{3 \times 3}$

i.e.,

$ |A|=\begin{vmatrix} a _{11} & a _{12} & a _{13} \\ a _{21} & a _{22} & a _{23} \\ a _{31} & a _{32} & a _{33} \end{vmatrix} $

Expansion along first Row $(\mathbf{R} _1)$

Step 1 Multiply the first element $a_{11}$ of $R_1$ by $(-1)^{1+1}$ $[(-1)^{\text{sum of suffixes in } a_{11}}]$ and by the second order determinant obtained by deleting the elements of the first row $(R_1)$ and first column $(C_1)$ of $|A|$, as $a_{11}$ lies in $R_1$ and $C_1$,

i.e.,

$ (-1)^{1+1} a _{11}\begin{vmatrix} a _{22} & a _{23} \\ a _{32} & a _{33} \end{vmatrix} $

Step 2 Multiply the second element $a_{12}$ of $R_1$ by $(-1)^{1+2}$ $[(-1)^{\text{sum of suffixes in } a_{12}}]$ and by the second order determinant obtained by deleting the elements of the first row $(R_1)$ and second column $(C_2)$ of $|A|$, as $a_{12}$ lies in $R_1$ and $C_2$,

i.e., $\quad(-1)^{1+2} a _{12}\begin{vmatrix}a _{21} & a _{23} \\ a _{31} & a _{33}\end{vmatrix}$

Step 3 Multiply the third element $a_{13}$ of $R_1$ by $(-1)^{1+3}$ $[(-1)^{\text{sum of suffixes in } a_{13}}]$ and by the second order determinant obtained by deleting the elements of the first row $(R_1)$ and third column $(C_3)$ of $|A|$, as $a_{13}$ lies in $R_1$ and $C_3$,

i.e., $\quad(-1)^{1+3} a _{13}\begin{vmatrix}a _{21} & a _{22} \\ a _{31} & a _{32}\end{vmatrix}$

Step 4 The expansion of the determinant of A, that is, $|A|$ written as the sum of the three terms obtained in Steps 1, 2 and 3 above, is given by

$ \begin{aligned} det A= & |A|=(-1)^{1+1} a _{11}\begin{vmatrix} a _{22} & a _{23} \\ a _{32} & a _{33} \end{vmatrix}+(-1)^{1+2} a _{12}\begin{vmatrix} a _{21} & a _{23} \\ a _{31} & a _{33} \end{vmatrix} \\ & +(-1)^{1+3} a _{13}\begin{vmatrix} a _{21} & a _{22} \\ a _{31} & a _{32} \end{vmatrix} \\ |A|= & a _{11}(a _{22} a _{33}-a _{32} a _{23})-a _{12}(a _{21} a _{33}-a _{31} a _{23}) \\ & +a _{13}(a _{21} a _{32}-a _{31} a _{22}) \end{aligned} $

or

$ \begin{aligned} |A|= & a _{11} a _{22} a _{33}-a _{11} a _{32} a _{23}-a _{12} a _{21} a _{33}+a _{12} a _{31} a _{23}+a _{13} a _{21} a _{32} \\ & -a _{13} a _{31} a _{22} \end{aligned} $

Note We shall apply all four steps together.
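Steps 1 to 4 translate directly into a loop over the three elements of $R_1$. The sketch below is a hypothetical illustration (the names `det2` and `det3_row1` and the sample matrix are our own); note that Python indexes from 0, so the textbook sign $(-1)^{1+(j+1)}$ becomes $(-1)^{j}$.

```python
def det2(m):
    """2x2 determinant: a11*a22 - a21*a12."""
    (a11, a12), (a21, a22) = m
    return a11 * a22 - a21 * a12


def det3_row1(m):
    """3x3 determinant by expansion along the first row (Steps 1-4)."""
    total = 0
    for j in range(3):
        # second order determinant left after deleting R1 and column j
        sub = [[m[r][c] for c in range(3) if c != j] for r in (1, 2)]
        # with 0-based j, the sign (-1)^(1 + (j+1)) equals (-1)^j
        total += (-1) ** j * m[0][j] * det2(sub)
    return total


M = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]
print(det3_row1(M))  # -3
```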

Expansion along second row $(\mathbf{R} _2)$

$ |A|=\begin{vmatrix} a_ {11} & a_ {12} & a_ {13} \\ a_ {21} & a_ {22} & a_ {23} \\ a_ {31} & a_ {32} & a_ {33} \end{vmatrix} $

Expanding along $R_2$, we get

$ \begin{aligned} |A|= & (-1)^{2+1} a _{21}\begin{vmatrix} a _{12} & a _{13} \\ a _{32} & a _{33} \end{vmatrix}+(-1)^{2+2} a _{22}\begin{vmatrix} a _{11} & a _{13} \\ a _{31} & a _{33} \end{vmatrix} \\ & +(-1)^{2+3} a _{23}\begin{vmatrix} a _{11} & a _{12} \\ a _{31} & a _{32} \end{vmatrix} \\ = & -a _{21}(a _{12} a _{33}-a _{32} a _{13})+a _{22}(a _{11} a _{33}-a _{31} a _{13}) \\ & -a _{23}(a _{11} a _{32}-a _{31} a _{12}) \\ |A|= & -a _{21} a _{12} a _{33}+a _{21} a _{32} a _{13}+a _{22} a _{11} a _{33}-a _{22} a _{31} a _{13}-a _{23} a _{11} a _{32} \\ & +a _{23} a _{31} a _{12} \\ = & a _{11} a _{22} a _{33}-a _{11} a _{23} a _{32}-a _{12} a _{21} a _{33}+a _{12} a _{23} a _{31}+a _{13} a _{21} a _{32} \\ & -a _{13} a _{31} a _{22} \end{aligned} $

Expansion along first Column $(C_1)$

$ |A|=\begin{vmatrix} a _{11} & a _{12} & a _{13} \\ a _{21} & a _{22} & a _{23} \\ a _{31} & a _{32} & a _{33} \end{vmatrix} $

By expanding along $C_1$, we get

$ \begin{aligned} |A|= & a _{11}(-1)^{1+1}\begin{vmatrix} a _{22} & a _{23} \\ a _{32} & a _{33} \end{vmatrix}+a _{21}(-1)^{2+1}\begin{vmatrix} a _{12} & a _{13} \\ a _{32} & a _{33} \end{vmatrix} \\ & +a _{31}(-1)^{3+1}\begin{vmatrix} a _{12} & a _{13} \\ a _{22} & a _{23} \end{vmatrix} \\ = & a _{11}(a _{22} a _{33}-a _{23} a _{32})-a _{21}(a _{12} a _{33}-a _{13} a _{32})+a _{31}(a _{12} a _{23}-a _{13} a _{22}) \end{aligned} $

$ \begin{aligned} |A|= & a _{11} a _{22} a _{33}-a _{11} a _{23} a _{32}-a _{21} a _{12} a _{33}+a _{21} a _{13} a _{32}+a _{31} a _{12} a _{23} \\ & -a _{31} a _{13} a _{22} \\ = & a _{11} a _{22} a _{33}-a _{11} a _{23} a _{32}-a _{12} a _{21} a _{33}+a _{12} a _{23} a _{31}+a _{13} a _{21} a _{32} \\ & -a _{13} a _{31} a _{22} \end{aligned} $

Clearly, the values of $|A|$ obtained by expanding along $R_1$, $R_2$ and $C_1$ are equal. It is left as an exercise for the reader to verify that expanding along $R_3$, $C_2$ and $C_3$ gives the same value of $|A|$.

Hence, expanding a determinant along any row or column gives the same value.
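The exercise of checking all six expansions can be automated. In this sketch (function names and the sample matrix are our own, hypothetical choices), `expand_row` and `expand_col` implement the expansion along one row or one column with sign $(-1)^{i+j}$; 0-based indices do not change the sign because $(i+1)+(j+1)$ and $i+j$ have the same parity.

```python
def minor(m, i, j):
    """Matrix left after deleting row i and column j (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by expansion along the first row; works for any order n >= 1."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def expand_row(m, i):
    """Expand along row i: sum of (-1)^(i+j) * a_ij * (minor of a_ij)."""
    return sum((-1) ** (i + j) * m[i][j] * det(minor(m, i, j)) for j in range(len(m)))


def expand_col(m, j):
    """Expand along column j: sum of (-1)^(i+j) * a_ij * (minor of a_ij)."""
    return sum((-1) ** (i + j) * m[i][j] * det(minor(m, i, j)) for i in range(len(m)))


A = [[2, 0, 1], [3, -1, 4], [5, 2, 0]]
values = [expand_row(A, i) for i in range(3)] + [expand_col(A, j) for j in range(3)]
print(values)  # [-5, -5, -5, -5, -5, -5]
```

Expanding along $C_2$, $C_3$ or $R_3$, each of which contains a zero, needs one fewer second order determinant, which is why rows or columns with zeros are preferred.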

Remarks

(i) For easier calculation, we shall expand the determinant along the row or column that contains the maximum number of zeros.

(ii) While expanding, instead of multiplying by $(-1)^{i+j}$, we can multiply by +1 or -1 according as $(i+j)$ is even or odd.

(iii) Let $A=\begin{bmatrix}2 & 2 \\ 4 & 0\end{bmatrix}$ and $B=\begin{bmatrix}1 & 1 \\ 2 & 0\end{bmatrix}$. Then it is easy to verify that $A=2B$. Also, $|A|=0-8=-8$ and $|B|=0-2=-2$.

Observe that, $|A|=4(-2)=2^{2}|B|$ or $|A|=2^{n}|B|$, where $n=2$ is the order of square matrices $A$ and $B$.

In general, if $A=kB$, where $A$ and $B$ are square matrices of order $n$, then $|A|=k^{n}|B|$, where $n=1,2,3$.
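A quick numerical check of $|kB|=k^{n}|B|$, reusing the pair $A=2B$ from remark (iii) and one extra $3 \times 3$ matrix of our own choosing. The helper names `minor`, `det` and `scale` are hypothetical.

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def scale(k, m):
    """The matrix kM: every entry multiplied by k."""
    return [[k * x for x in row] for row in m]


B = [[1, 1], [2, 0]]
A = scale(2, B)  # A = 2B, as in remark (iii)
print(det(A), det(B))  # -8 -2

B3 = [[1, 2, 3], [0, 1, 4], [5, 6, 0]]  # |B3| = 1, order n = 3
print(det(scale(2, B3)), 2 ** 3 * det(B3))  # 8 8
```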

Properties of Determinants

In the previous section, we learnt how to expand determinants. In this section, we will study some properties of determinants that simplify their evaluation by producing the maximum number of zeros in a row or a column. These properties hold for determinants of any order; however, we shall restrict ourselves to determinants of order up to 3.

Property 1 The value of the determinant remains unchanged if its rows and columns are interchanged.

Verification Let $\Delta=\left|\begin{array}{lll}a_1 & a_2 & a_3 \\ b_1 & b_2 & b_3 \\ c_1 & c_2 & c_3\end{array}\right|$ Expanding along first row, we get $$ \begin{aligned} \Delta & =a_1\left|\begin{array}{ll} b_2 & b_3 \\ c_2 & c_3 \end{array}\right|-a_2\left|\begin{array}{ll} b_1 & b_3 \\ c_1 & c_3 \end{array}\right|+a_3\left|\begin{array}{ll} b_1 & b_2 \\ c_1 & c_2 \end{array}\right| \\ & =a_1\left(b_2 c_3-b_3 c_2\right)-a_2\left(b_1 c_3-b_3 c_1\right)+a_3\left(b_1 c_2-b_2 c_1\right) \end{aligned} $$

By interchanging the rows and columns of $\Delta$, we get the determinant $$ \Delta_1=\left|\begin{array}{lll} a_1 & b_1 & c_1 \\ a_2 & b_2 & c_2 \\ a_3 & b_3 & c_3 \end{array}\right| $$

Expanding $\Delta_1$ along first column, we get $$ \Delta_1=a_1\left(b_2 c_3-c_2 b_3\right)-a_2\left(b_1 c_3-b_3 c_1\right)+a_3\left(b_1 c_2-b_2 c_1\right) $$

Hence $\Delta=\Delta_1$.

Remark It follows from the above property that if $\mathrm{A}$ is a square matrix, then $\operatorname{det}(A)=\operatorname{det}\left(A^{\prime}\right)$, where $A^{\prime}$ is the transpose of $A$.

Note If $\mathrm{R}_i$ denotes the $i$th row and $\mathrm{C}_i$ the $i$th column, then for the interchange of rows and columns we will symbolically write $\mathrm{C}_i \leftrightarrow \mathrm{R}_i$. Let us verify the above property by example.
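As a small numerical example of $\operatorname{det}(A)=\operatorname{det}(A^{\prime})$, the sketch below forms the transpose with Python's built-in `zip`. The helper names and the sample matrix are our own illustrative choices.

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def transpose(m):
    """Interchange rows and columns (C_i <-> R_i)."""
    return [list(col) for col in zip(*m)]


A = [[2, 0, 1], [3, -1, 4], [5, 2, 0]]
print(det(A), det(transpose(A)))  # -5 -5
```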

Remarks

(i) By this property, we can take out any common factor from any one row or any one column of a given determinant.

(ii) If the corresponding elements of any two rows (or columns) of a determinant are proportional (in the same ratio), then its value is zero. For example, $\Delta=\left|\begin{array}{ccc}a_1 & a_2 & a_3 \\ b_1 & b_2 & b_3 \\ k a_1 & k a_2 & k a_3\end{array}\right|=0$ (rows $\mathrm{R}_1$ and $\mathrm{R}_3$ are proportional).

Remarks

(i) If $\Delta_1$ is the determinant obtained by applying $\mathrm{R}_i \rightarrow k \mathrm{R}_i$ or $\mathrm{C}_i \rightarrow k \mathrm{C}_i$ to the determinant $\Delta$, then $\Delta_1=k \Delta$.

(ii) If more than one operation like $\mathrm{R}_i \rightarrow \mathrm{R}_i+k \mathrm{R}_j$ is done in one step, care should be taken to see that a row that is affected in one operation is not used in another operation. A similar remark applies to column operations.
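The remarks on row operations can be checked numerically. This Python sketch (helper names and the sample determinant with value $-5$ are our own, hypothetical choices) applies $R_2 \to 3R_2$, which should triple the value; applies $R_1 \to R_1 + 3R_2$, an operation of the kind mentioned in remark (ii), which is a standard property known to leave the value unchanged; and builds a determinant whose third row is proportional to its first, which should be zero.

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


D = [[2, 0, 1], [3, -1, 4], [5, 2, 0]]  # det(D) = -5
k = 3

scaled = [row[:] for row in D]
scaled[1] = [k * x for x in D[1]]  # R2 -> 3 R2

added = [row[:] for row in D]
added[0] = [x + k * y for x, y in zip(D[0], D[1])]  # R1 -> R1 + 3 R2

prop = [D[0], D[1], [k * x for x in D[0]]]  # R3 proportional to R1

print(det(scaled), det(added), det(prop))  # -15 -5 0
```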

4.3 Area of a Triangle

In earlier classes, we studied that the area of a triangle whose vertices are $(x_1, y_1)$, $(x_2, y_2)$ and $(x_3, y_3)$ is given by the expression $\frac{1}{2}[x_1(y_2-y_3)+x_2(y_3-y_1)+x_3(y_1-y_2)]$. This expression can be written in the form of a determinant as

$ \Delta=\frac{1}{2}\begin{vmatrix} x_1 & y_1 & 1 \\ x_2 & y_2 & 1 \\ x_3 & y_3 & 1 \end{vmatrix} $

Remarks

(i) Since area is a positive quantity, we always take the absolute value of the determinant above.

(ii) If the area is given, use both the positive and the negative value of the determinant in the calculation.

(iii) The area of the triangle formed by three collinear points is zero.
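The area formula and remarks (i) and (iii) can be sketched as follows. The function names and sample points are hypothetical; `fractions.Fraction` keeps the factor $\frac{1}{2}$ exact, and `det3` is the first-row expansion written out in full.

```python
from fractions import Fraction


def det3(m):
    """3x3 determinant by expansion along the first row."""
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3) = m
    return a1 * (b2 * c3 - b3 * c2) - a2 * (b1 * c3 - b3 * c1) + a3 * (b1 * c2 - b2 * c1)


def triangle_area(p1, p2, p3):
    """Half the absolute value of the determinant with rows (x_i, y_i, 1)."""
    rows = [[x, y, 1] for (x, y) in (p1, p2, p3)]
    return abs(Fraction(det3(rows), 2))


print(triangle_area((3, 8), (-4, 2), (5, 1)))  # 61/2
print(triangle_area((1, 1), (2, 2), (3, 3)))  # 0  (collinear points)
```

Taking `abs` implements remark (i); a zero result for collinear points illustrates remark (iii).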

4.4 Minors and Cofactors

In this section, we will learn to write the expansion of a determinant in compact form using minors and cofactors.

Definition 1 The minor of an element $a_{ij}$ of a determinant is the determinant obtained by deleting the $i$th row and $j$th column in which the element $a_{ij}$ lies. The minor of an element $a_{ij}$ is denoted by $M_{ij}$.

Remark Minor of an element of a determinant of order $n(n \geq 2)$ is a determinant of order $n-1$.

Definition 2 Cofactor of an element $a _{i j}$, denoted by $A _{i j}$ is defined by

$ A _{i j}=(-1)^{i+j} M _{i j} \text{, where } M _{i j} \text{ is minor of } a _{i j} \text{. } $

Remark Expanding the determinant $\Delta$ in Example 21 along $R_1$, we have

$ \begin{aligned} \Delta & =(-1)^{1+1} a _{11}\begin{vmatrix} a _{22} & a _{23} \\ a _{32} & a _{33} \end{vmatrix}+(-1)^{1+2} a _{12}\begin{vmatrix} a _{21} & a _{23} \\ a _{31} & a _{33} \end{vmatrix}+(-1)^{1+3} a _{13}\begin{vmatrix} a _{21} & a _{22} \\ a _{31} & a _{32} \end{vmatrix} \\ & =a _{11} A _{11}+a _{12} A _{12}+a _{13} A _{13} \text{, where } A _{i j} \text{ is cofactor of } a _{i j} \\ & =\text{ sum of product of elements of } R_1 \text{ with their corresponding cofactors } \end{aligned} $

Similarly, $\Delta$ can be calculated by the other five expansions, that is, along $R_2$, $R_3$, $C_1$, $C_2$ and $C_3$.

Hence $\Delta$ = sum of the products of the elements of any row (or column) with their corresponding cofactors.

Note If the elements of a row (or column) are multiplied by the cofactors of any other row (or column), then their sum is zero. For example,

$ \begin{aligned} a_{11} A_{21}+a_{12} A_{22}+a_{13} A_{23} & =a_{11}(-1)^{2+1}\begin{vmatrix} a_{12} & a_{13} \\ a_{32} & a_{33} \end{vmatrix}+a_{12}(-1)^{2+2}\begin{vmatrix} a_{11} & a_{13} \\ a_{31} & a_{33} \end{vmatrix}+a_{13}(-1)^{2+3}\begin{vmatrix} a_{11} & a_{12} \\ a_{31} & a_{32} \end{vmatrix} \\ & =\begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{11} & a_{12} & a_{13} \\ a_{31} & a_{32} & a_{33} \end{vmatrix}=0 \text{ since } R_1 \text{ and } R_2 \text{ are identical} \end{aligned} $

Similarly, we can try for other rows and columns.
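Both facts, that a row times its own cofactors gives $\Delta$ and that a row times the cofactors of another row gives 0, can be checked in a few lines. The helper names (`minor`, `det`, `cofactor`) and the sample matrix are our own, hypothetical choices.

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def cofactor(m, i, j):
    """A_ij = (-1)^(i+j) M_ij; 0-based indices give the same sign."""
    return (-1) ** (i + j) * det(minor(m, i, j))


A = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]
n = len(A)
# elements of R1 times their own cofactors: gives Delta
own = sum(A[0][j] * cofactor(A, 0, j) for j in range(n))
# elements of R1 times the cofactors of R2: gives 0
alien = sum(A[0][j] * cofactor(A, 1, j) for j in range(n))
print(own, alien)  # -3 0
```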

4.5 Adjoint and Inverse of a Matrix

In the previous chapter, we studied the inverse of a matrix. In this section, we shall discuss the condition for the existence of the inverse of a matrix.

To find the inverse $A^{-1}$ of a matrix A, we shall first define the adjoint of a matrix.

4.5.1 Adjoint of a matrix

Definition 3 The adjoint of a square matrix $A=[a _{i j}] _{n \times n}$ is defined as the transpose of the matrix $[A _{i j}] _{n \times n}$, where $A _{i j}$ is the cofactor of the element $a _{i j}$. Adjoint of the matrix A is denoted by adj $A$.

Let

$ A=\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} $

Then $\quad adj A=$ Transpose of $\begin{bmatrix}A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23} \\ A_{31} & A_{32} & A_{33}\end{bmatrix}=\begin{bmatrix}A_{11} & A_{21} & A_{31} \\ A_{12} & A_{22} & A_{32} \\ A_{13} & A_{23} & A_{33}\end{bmatrix}$

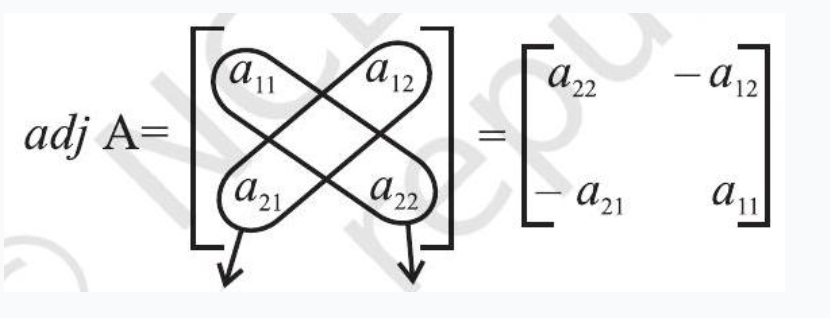

Remark For a square matrix of order 2, given by

$ A=\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}, $

adj A can also be obtained by interchanging $a_{11}$ and $a_{22}$ and changing the signs of $a_{12}$ and $a_{21}$, i.e.,

$ adj A=\begin{bmatrix} a_{22} & -a_{12} \\ -a_{21} & a_{11} \end{bmatrix} $

We state the following theorem without proof.

Theorem 1 If $A$ is any given square matrix of order $n$, then

$ A(adj A)=(adj A) A=|A| I, $

where I is the identity matrix of order $n$

Verification

Let $\quad A=\begin{bmatrix}a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33}\end{bmatrix}$. Then $adj A=\begin{bmatrix}A_{11} & A_{21} & A_{31} \\ A_{12} & A_{22} & A_{32} \\ A_{13} & A_{23} & A_{33}\end{bmatrix}$.

Since the sum of the products of the elements of a row (or a column) with the corresponding cofactors is equal to $|A|$, and is zero otherwise, we have

$ A(adj A)=\begin{bmatrix} |A| & 0 & 0 \\ 0 & |A| & 0 \\ 0 & 0 & |A| \end{bmatrix}=|A|\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}=|A| I $

Similarly, we can show $(adj A) A=|A| I$

Hence $A(adj A)=(adj A) A=|A| I$
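Theorem 1 can be checked on a concrete matrix by building adj A as the transpose of the cofactor matrix and multiplying. The names `adjoint` and `matmul` and the sample matrix (with $|A|=-3$) are hypothetical; the last line also confirms the $2 \times 2$ shortcut of interchanging $a_{11}, a_{22}$ and changing the signs of $a_{12}, a_{21}$.

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def cofactor(m, i, j):
    """A_ij = (-1)^(i+j) M_ij."""
    return (-1) ** (i + j) * det(minor(m, i, j))


def adjoint(m):
    """Transpose of the cofactor matrix: entry (i, j) of adj A is A_ji."""
    n = len(m)
    return [[cofactor(m, j, i) for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


A = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]
print(matmul(A, adjoint(A)))  # [[-3, 0, 0], [0, -3, 0], [0, 0, -3]]
print(matmul(adjoint(A), A))  # [[-3, 0, 0], [0, -3, 0], [0, 0, -3]]
print(adjoint([[1, 2], [3, 4]]))  # [[4, -2], [-3, 1]]
```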

Definition 4 A square matrix $A$ is said to be singular if $|A|=0$.

For example, the determinant of the matrix $A=\begin{bmatrix}1 & 2 \\ 4 & 8\end{bmatrix}$ is $|A|=8-8=0$.

Hence $A$ is a singular matrix.

Definition 5 A square matrix $A$ is said to be non-singular if $|A| \neq 0$

Let

$ A=\begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix} . \text{ Then }|A|=\begin{vmatrix} 1 & 2 \\ 3 & 4 \end{vmatrix}=4-6=-2 \neq 0 $

Hence $A$ is a nonsingular matrix

We state the following theorems without proof.

Theorem 2 If $A$ and $B$ are nonsingular matrices of the same order, then $AB$ and $BA$ are also nonsingular matrices of the same order.

Theorem 3 The determinant of the product of matrices is equal to product of their respective determinants, that is, $|AB|=|A||B|$, where $A$ and $B$ are square matrices of the same order
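Theorem 3 is stated without proof, but it is easy to test on examples. In this sketch the helper names and the matrices $A$ and $B$ are our own, hypothetical choices ($|A|=-2$, $|B|=6$).

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def matmul(x, y):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


A = [[1, 2], [3, 4]]
B = [[2, 0], [1, 3]]
print(matmul(A, B))  # [[4, 6], [10, 12]]
print(det(matmul(A, B)), det(A) * det(B))  # -12 -12
```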

Remark For a square matrix $A$ of order 3, we know that $(adj A) A=\begin{bmatrix} |A| & 0 & 0\\ 0 & |A| & 0\\ 0 & 0 & |A| \end{bmatrix}$

Writing determinants of matrices on both sides, we have

$ |(adj A) A|=\begin{vmatrix} |A| & 0 & 0 \\ 0 & |A| & 0 \\ 0 & 0 & |A| \end{vmatrix} $ i.e.

$ |(adj A)||A|=|A|^{3}\begin{vmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{vmatrix} $ i.e.

$ |(adj A)||A|=|A|^{3}(1) $ i.e.

$ |(adj A)|=|A|^{2} $ (dividing both sides by $|A|$, which is valid when $|A| \neq 0$)

In general, if $A$ is a square matrix of order $n$, then $|adj(A)|=|A|^{n-1}$.
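A numerical check of $|adj(A)|=|A|^{n-1}$ for one matrix of order 3 and one singular matrix of order 2. The helper names and matrices are hypothetical; the singular case shows that the identity still holds when $|A|=0$, even though the derivation above divided by $|A|$.

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def cofactor(m, i, j):
    """A_ij = (-1)^(i+j) M_ij."""
    return (-1) ** (i + j) * det(minor(m, i, j))


def adjoint(m):
    """Transpose of the cofactor matrix."""
    n = len(m)
    return [[cofactor(m, j, i) for j in range(n)] for i in range(n)]


A = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]  # order n = 3, |A| = -3
print(det(adjoint(A)), det(A) ** 2)  # 9 9

S = [[1, 2], [4, 8]]  # singular, order n = 2
print(det(adjoint(S)), det(S) ** 1)  # 0 0
```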

Theorem 4 A square matrix $A$ is invertible if and only if $A$ is a nonsingular matrix.

Proof Let A be an invertible matrix of order $n$ and let I be the identity matrix of order $n$.

Then there exists a square matrix $B$ of order $n$ such that $AB=BA=I$.

Now

$ AB=I \text{. So }|AB|=|I| \text{ or }|A||B|=1 \quad \text{ (since }|I|=1,|AB|=|A||B| \text{ ) } $

This gives $\quad|A| \neq 0$. Hence $A$ is nonsingular.

Conversely, let A be nonsingular. Then $|A| \neq 0$

Now

$ A(adj A)=(adj A) A=|A| I \quad \text{(Theorem 1)} $

or

$ A(\frac{1}{|A|} adj A)=(\frac{1}{|A|} adj A) A=I $

or

$ AB=BA=I \text{, where } B=\frac{1}{|A|} adj A $

Thus $A$ is invertible and $A^{-1}=\frac{1}{|A|}$ adj $A$.
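The formula $A^{-1}=\frac{1}{|A|}$ adj $A$ from the proof can be sketched directly. This is an illustration under our own assumptions: the function names are hypothetical, entries are integers, and `fractions.Fraction` keeps the division by $|A|$ exact. A singular matrix is rejected, in line with Theorem 4.

```python
from fractions import Fraction


def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def adjoint(m):
    """Transpose of the cofactor matrix: entry (i, j) is A_ji."""
    n = len(m)
    return [[(-1) ** (i + j) * det(minor(m, j, i)) for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Ordinary matrix product of two lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def inverse(m):
    """A^{-1} = (1/|A|) adj A with exact fractions; only defined when |A| != 0."""
    d = det(m)
    if d == 0:
        raise ValueError("singular matrix: |A| = 0, so A has no inverse")
    return [[Fraction(x, d) for x in row] for row in adjoint(m)]


A = [[1, 2], [3, 4]]
A_inv = inverse(A)
print([[str(x) for x in row] for row in A_inv])  # [['-2', '1'], ['3/2', '-1/2']]
print(matmul(A, A_inv) == [[1, 0], [0, 1]])  # True
```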

4.6 Applications of Determinants and Matrices

In this section, we shall discuss application of determinants and matrices for solving the system of linear equations in two or three variables and for checking the consistency of the system of linear equations.

Consistent system A system of equations is said to be consistent if its solution (one or more) exists.

Inconsistent system A system of equations is said to be inconsistent if its solution does not exist.

Note In this chapter, we restrict ourselves to the system of linear equations having unique solutions only.

4.6.1 Solution of system of linear equations using inverse of a matrix

Let us express the system of linear equations as matrix equations and solve them using inverse of the coefficient matrix.

Consider the system of equations

$ \begin{aligned} a_1 x+b_1 y+c_1 z & =d_1 \\ a_2 x+b_2 y+c_2 z & =d_2 \\ a_3 x+b_3 y+c_3 z & =d_3 \end{aligned} $

Let $A=\begin{bmatrix} a_1 & b_1 & c_1 \\ a_2 & b_2 & c_2 \\ a_3 & b_3 & c_3 \end{bmatrix}$, $X=\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ and $B=\begin{bmatrix} d_1 \\ d_2 \\ d_3 \end{bmatrix}$.

Then, the system of equations can be written as, $AX=B$, i.e.,

$ \begin{bmatrix} a_1 & b_1 & c_1 \\ a_2 & b_2 & c_2 \\ a_3 & b_3 & c_3 \end{bmatrix}\begin{bmatrix} x \\ y \\ z \end{bmatrix}=\begin{bmatrix} d_1 \\ d_2 \\ d_3 \end{bmatrix} $

Case I If $A$ is a nonsingular matrix, then its inverse exists. Now

$ \begin{aligned} AX & =B \\ A^{-1}(AX) & =A^{-1} B && \text{(premultiplying by } A^{-1}) \\ (A^{-1} A) X & =A^{-1} B && \text{(by the associative property)} \\ IX & =A^{-1} B \\ X & =A^{-1} B \end{aligned} $

This matrix equation gives the unique solution of the given system of equations, since the inverse of a matrix is unique. This method of solving a system of equations is known as the Matrix Method.
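The Matrix Method for Case I can be sketched as computing $X=A^{-1}B$ with exact fractions. The function names are hypothetical, and the sample system $x+y+z=6$, $y+3z=11$, $x-2y+z=0$ (with $|A|=9 \neq 0$) is our own example.

```python
from fractions import Fraction


def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def inverse(m):
    """A^{-1} = (1/|A|) adj A; adj A has the cofactor A_ji in position (i, j)."""
    d = det(m)
    if d == 0:
        raise ValueError("|A| = 0: the matrix method does not apply")
    n = len(m)
    return [[Fraction((-1) ** (i + j) * det(minor(m, j, i)), d) for j in range(n)]
            for i in range(n)]


def solve(a, b):
    """Matrix method: X = A^{-1} B, valid only when |A| != 0 (Case I)."""
    a_inv = inverse(a)
    return [sum(a_inv[i][j] * b[j] for j in range(len(b))) for i in range(len(b))]


# x + y + z = 6,  y + 3z = 11,  x - 2y + z = 0
A = [[1, 1, 1], [0, 1, 3], [1, -2, 1]]
B = [6, 11, 0]
print([str(v) for v in solve(A, B)])  # ['1', '2', '3']
```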

Case II If $A$ is a singular matrix, then $|A|=0$.

In this case, we calculate $(adj A) B$.

If $(adj A) B \neq O$ ($O$ being the zero matrix), then no solution exists and the system of equations is called inconsistent.

If $(adj A) B=O$, then the system may be either consistent or inconsistent, according as it has infinitely many solutions or no solution.
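The decision rule of Cases I and II can be sketched as a small classifier. The function name `classify` and the example systems are hypothetical. The last two examples show why the case $(adj A)B=O$ is inconclusive: $x+y=2$, $2x+2y=4$ has infinitely many solutions, while $x+y+z=1$, $x+y+z=2$, $x+y+z=3$ has none, yet both give $(adj A)B=O$.

```python
def minor(m, i, j):
    """Delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(m) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def adjoint(m):
    """Transpose of the cofactor matrix: entry (i, j) is A_ji."""
    n = len(m)
    return [[(-1) ** (i + j) * det(minor(m, j, i)) for j in range(n)] for i in range(n)]


def classify(a, b):
    """Apply the rules of Case I and Case II to the system AX = B."""
    if det(a) != 0:
        return "unique solution"
    adj_b = [sum(row[j] * b[j] for j in range(len(b))) for row in adjoint(a)]
    if any(v != 0 for v in adj_b):
        return "inconsistent"
    return "infinitely many solutions or no solution"


print(classify([[2, 1], [1, 1]], [3, 2]))  # unique solution
print(classify([[1, 1], [2, 2]], [2, 5]))  # inconsistent
print(classify([[1, 1], [2, 2]], [2, 4]))  # infinitely many solutions or no solution
print(classify([[1, 1, 1], [1, 1, 1], [1, 1, 1]], [1, 2, 3]))  # infinitely many solutions or no solution
```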

Summary

- Determinant of a matrix $A=[a_{11}]_{1 \times 1}$ is given by $|a_{11}|=a_{11}$.

- Determinant of a matrix $A=\begin{bmatrix}a_{11} & a_{12} \\ a_{21} & a_{22}\end{bmatrix}$ is given by

$ |A|=\begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix}=a_{11} a_{22}-a_{12} a_{21} $

- Determinant of a matrix $A=\begin{bmatrix} a_1 & b_1 & c_1 \\ a_2 & b_2 & c_2 \\ a_3 & b_3 & c_3 \end{bmatrix}$ is given by (expanding along $R_1$)

$ |A|=\begin{vmatrix} a_1 & b_1 & c_1 \\ a_2 & b_2 & c_2 \\ a_3 & b_3 & c_3 \end{vmatrix}=a_1\begin{vmatrix} b_2 & c_2 \\ b_3 & c_3 \end{vmatrix}-b_1\begin{vmatrix} a_2 & c_2 \\ a_3 & c_3 \end{vmatrix}+c_1\begin{vmatrix} a_2 & b_2 \\ a_3 & b_3 \end{vmatrix} $

- For any square matrix $\mathbf{A}$, $|\mathbf{A}|$ satisfies the following properties.

- Area of a triangle with vertices $(x_1, y_1)$, $(x_2, y_2)$ and $(x_3, y_3)$ is given by

$ \Delta=\frac{1}{2}\begin{vmatrix} x_1 & y_1 & 1 \\ x_2 & y_2 & 1 \\ x_3 & y_3 & 1 \end{vmatrix} $

- Minor of an element $a_{ij}$ of the determinant of matrix A is the determinant obtained by deleting the $i^{\text{th}}$ row and $j^{\text{th}}$ column, and is denoted by $M_{ij}$.

- Cofactor of $a_{ij}$ is given by $A_{ij}=(-1)^{i+j} M_{ij}$.

- Value of determinant of a matrix A is obtained by sum of product of elements of a row (or a column) with corresponding cofactors. For example,

$ |A|=a _{11} A _{11}+a _{12} A _{12}+a _{13} A _{13} . $

- If elements of one row (or column) are multiplied with cofactors of elements of any other row (or column), then their sum is zero. For example, $a_{11} A_{21}+a_{12} A_{22}+a_{13} A_{23}=0$.

- If $A=\begin{bmatrix}a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33}\end{bmatrix}$, then $adj A=\begin{bmatrix}A_{11} & A_{21} & A_{31} \\ A_{12} & A_{22} & A_{32} \\ A_{13} & A_{23} & A_{33}\end{bmatrix}$, where $A_{ij}$ is the cofactor of $a_{ij}$.

- $A(adj A)=(adj A) A=|A| I$, where $A$ is square matrix of order $n$.

- A square matrix A is said to be singular or non-singular according as $|A|=0$ or $|A| \neq 0$.

- If $AB=BA=I$, where $B$ is a square matrix, then $B$ is called the inverse of $A$. Also $A^{-1}=B$ and $B^{-1}=A$, and hence $(A^{-1})^{-1}=A$.

- A square matrix $A$ has an inverse if and only if $A$ is non-singular, and then $A^{-1}=\frac{1}{|A|}(adj A)$.

- If

$ \begin{aligned} a_1 x+b_1 y+c_1 z & =d_1 \\ a_2 x+b_2 y+c_2 z & =d_2 \\ a_3 x+b_3 y+c_3 z & =d_3, \end{aligned} $

then these equations can be written as $AX=B$, where

$ A=\begin{bmatrix} a_1 & b_1 & c_1 \\ a_2 & b_2 & c_2 \\ a_3 & b_3 & c_3 \end{bmatrix}, X=\begin{bmatrix} x \\ y \\ z \end{bmatrix} \text{ and } B=\begin{bmatrix} d_1 \\ d_2 \\ d_3 \end{bmatrix} $

- Unique solution of equation $A X=B$ is given by $X=A^{-1} B$, where $|A| \neq 0$.

- A system of equations is consistent or inconsistent according as its solution exists or not.

- For a square matrix $A$ in the matrix equation $AX=B$:

(i) if $|A| \neq 0$, there exists a unique solution;

(ii) if $|A|=0$ and $(adj A) B \neq O$, then no solution exists;

(iii) if $|A|=0$ and $(adj A) B=O$, then the system may or may not be consistent.

Historical Note

The Chinese method of representing the coefficients of the unknowns of several linear equations by rods on a calculating board naturally led to the discovery of a simple method of elimination. The arrangement of rods was precisely that of the numbers in a determinant. The Chinese therefore developed early the idea of subtracting columns and rows, as in the simplification of a determinant (Mikami, China, pp. 30, 93).

Seki Kowa, the greatest Japanese mathematician of the seventeenth century, showed in his work ‘Kai Fukudai no Ho’ (1683) that he had the idea of determinants and of their expansion. But he used this device only in eliminating a quantity from two equations, and not directly in the solution of a set of simultaneous linear equations (T. Hayashi, “The Fakudoi and Determinants in Japanese Mathematics,” in the Proc. of the Tokyo Math. Soc., V).

Vandermonde was the first to recognise determinants as independent functions. He may be called the formal founder. Laplace (1772) gave a general method of expanding a determinant in terms of its complementary minors. In 1773, Lagrange treated determinants of the second and third orders and used them for purposes other than the solution of equations. In 1801, Gauss used determinants in his theory of numbers.

The next great contributor was Jacques-Philippe-Marie Binet (1812), who stated the theorem relating to the product of two matrices of $m$ columns and $n$ rows, which for the special case $m=n$ reduces to the multiplication theorem.

On the same day, Cauchy (1812) presented a paper on the same subject. He used the word ‘determinant’ in its present sense, and he gave a more satisfactory proof of the multiplication theorem than Binet’s.

The greatest contributor to the theory was Carl Gustav Jacob Jacobi; after him, the word ‘determinant’ received its final acceptance.